February 25th, 2019

Microsoft and the US Military

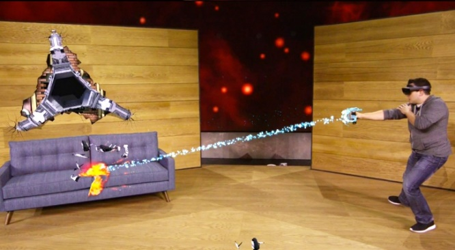

Recently, Microsoft formally announced the much-anticipated second edition of its “HoloLens” headset, which lets the wearer see virtual images superimposed on the real world. The first edition has been widely used: for example, a worker wearing the headset can be shown exactly which part of a machine needs attention, or a designer can try out furniture arrangements by placing virtual furniture within a real space.

This should have been a wholly triumphant moment for Microsoft as it announced the improvements made over the past three years. But some of the company’s employees took the opportunity to sound a sourer note. They disagreed with one particular contract, which will allow the US military to use HoloLens to train soldiers, and argued that “intent to harm is not an acceptable use of our technology”.

Today, Microsoft CEO Satya Nadella responded, making clear that he stands behind the military contract. “We made a principled decision that we’re not going to withhold technology from institutions that we have elected in democracies to protect the freedoms we enjoy,” he said, referring to an earlier blog post in which Microsoft President Brad Smith argued that “All of us who live in this country depend on its strong defense.”

I’m glad Microsoft chose to keep supporting the US military. The employees have a point, but they went too far by alleging that the military has any “intent to harm”. On the contrary, I believe the US military’s demonstrated purpose is to protect us from harm, and that it has protections in place to wage war justly.

That said, I’m not sure that Nadella drew the moral line in the correct place by arguing that our government’s actions are legitimate primarily because it is elected. After all, I believe we should all acknowledge that the US military has committed human rights violations in the past, such as the indiscriminate killing of civilians in the nuclear bombings of Hiroshima and Nagasaki. (If you haven’t already, I recommend reading “Black Rain”, an account of the aftermath in Hiroshima from the point of view of a civilian.) Democratic governance is often effective at preserving moral norms, but not always.

Brad Smith was closer to the mark when he wrote, “Deliberations about just wars literally date back millennia, including to Cicero and ancient Rome.” I wish he (and Microsoft leadership as a whole) had gone further and endorsed a specific definition of a just war. An objective moral standard is the essential missing ingredient here: without one, moral relativism lets abuses creep in under the excuse that “other countries do it”. It would be heartening to see Microsoft lay out how it would respond if, for instance, the United States were to engage today in chemical, biological, or nuclear attacks that kill indiscriminately.

The protesting employees, meanwhile, ought to grapple with the full implications of their stance. If they believe that the US military is gravely immoral and can’t be supported, how do they justify living in the US at all? After all, their taxes pay for the military’s budget.

I think the employees’ best point came when they argued for greater transparency. I have no doubt that Microsoft, like other technology “supercompanies”, is tempted to do business with the governments of China, Saudi Arabia, or other known human rights abusers. If Microsoft and Satya Nadella believe that democracy and open deliberation lead to better moral outcomes in society at large, couldn’t those same practices help within the firm as well? I hope the vital conversation about gravely wrong actions, and the role of technology in aiding or preventing them, continues.

Sincerely,

David Smedberg